Figure 1. Safety vs. Risk (Long, 2017)

The DJI Phantom 4 Pro is a commercially available sUAS used by hobbyists and professionals alike for aerial video and photography. For the purpose of this discussion we will focus on the flight characteristics and safety features of the Phantom 4. It is capable of autonomous flight and obstacle avoidance (Phantom 4, 2017).

The Phantom 4 has built-in GPS, a barometer, forward vision, downward vision, rearward vision, two ultrasonic sensors, and infrared systems on both sides. These systems are combined for obstacle avoidance and navigation, and can be used independently if needed. For instance, when flying indoors without a GPS signal, the vision system will hold a stable hover without inputs from the operator (Phantom 4, 2017).

There are three Return-to-Home (RTH) modes of flight: Failsafe RTH, Smart RTH, and Low Battery RTH. In all modes obstacle avoidance is active and the Phantom will adjust altitude if needed. Before any flight a “home” point is established for the Phantom 4; this is the point of launch. Failsafe RTH engages whenever the link with the controller is lost for 3 seconds. The Phantom then retraces its route of flight back home, which keeps it on a path it has already flown. Smart RTH is activated by the user with a button on the controller and automatically flies the Phantom back to the home point. Low Battery RTH engages automatically when the battery is too low for continued flight. The Phantom continuously calculates the flight time required to return home; when the battery is low enough that continued flight may affect a safe return, Low Battery RTH engages and flies the Phantom home (Phantom 4, 2017).
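
The three RTH triggers described above can be sketched as a small decision function. This is an illustrative model only, not DJI's firmware logic: the names `FlightState` and `select_rth_mode`, the priority order between triggers, and the simple comparison of remaining flight time against time to home are all assumptions made for the example. Only the 3-second link-loss threshold comes from the text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

# From the text: Failsafe RTH engages after 3 seconds without a controller link.
LINK_LOSS_TIMEOUT_S = 3.0


class RthMode(Enum):
    FAILSAFE = "Failsafe RTH"
    SMART = "Smart RTH"
    LOW_BATTERY = "Low Battery RTH"


@dataclass
class FlightState:
    """Hypothetical snapshot of the inputs that drive an RTH decision."""
    seconds_since_link: float       # time since the last controller contact
    rth_button_pressed: bool        # operator pressed the RTH button
    remaining_flight_time_s: float  # estimated flight time left on the battery
    time_to_home_s: float           # estimated time needed to fly home


def select_rth_mode(state: FlightState) -> Optional[RthMode]:
    """Return the RTH mode that would engage, or None for normal flight.

    The ordering of the checks is an assumption made for illustration.
    """
    if state.seconds_since_link >= LINK_LOSS_TIMEOUT_S:
        return RthMode.FAILSAFE
    # Assumed rule: engage when the battery can no longer cover the trip home.
    if state.remaining_flight_time_s <= state.time_to_home_s:
        return RthMode.LOW_BATTERY
    if state.rth_button_pressed:
        return RthMode.SMART
    return None


if __name__ == "__main__":
    lost_link = FlightState(3.5, False, 600.0, 60.0)
    print(select_rth_mode(lost_link))
```

A real aircraft would add a safety margin to the battery comparison; the sketch leaves that out because the text does not state one.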

The Phantom visually scans and records the terrain when the home point is established. During any RTH mode, if the landing terrain doesn’t match the terrain in memory, the Phantom will hold a hover and wait for user input to confirm landing. During any landing the Phantom also visually scans the landing zone; if it determines the zone isn’t suitable, it will automatically hold a hover and await confirmation from the operator to land. It will hold that hover until the landing is confirmed or until the battery is too low, at which point it will land (Phantom 4, 2017).
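
The landing behaviour described above reduces to a few conditions, sketched below. The function name `landing_action` and its boolean inputs are hypothetical and stand in for whatever the aircraft actually measures; the sketch only encodes the rules stated in the text.

```python
from enum import Enum


class LandingAction(Enum):
    LAND = "land"
    HOVER = "hold hover and wait for operator confirmation"


def landing_action(
    terrain_matches_home: bool,
    zone_suitable: bool,
    operator_confirmed: bool,
    battery_too_low: bool,
) -> LandingAction:
    """Decide whether to land or hover, following the rules in the text.

    All four inputs are assumed to be supplied by other systems.
    """
    # A battery that is too low forces a landing regardless of the terrain.
    if battery_too_low:
        return LandingAction.LAND
    # Operator confirmation overrides a failed terrain or suitability check.
    if operator_confirmed:
        return LandingAction.LAND
    # With no override, land only when both visual checks pass.
    if terrain_matches_home and zone_suitable:
        return LandingAction.LAND
    return LandingAction.HOVER


if __name__ == "__main__":
    # Terrain does not match and the operator has not confirmed yet.
    print(landing_action(False, True, False, False).value)
```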

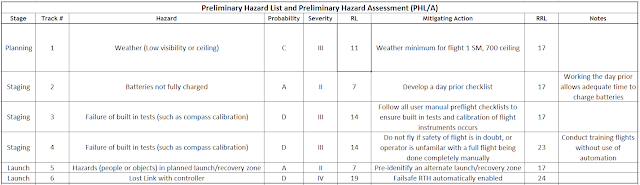

An Operational Risk Management (ORM) assessment was completed for the Phantom 4 in order to ensure safe flight and mitigate any risks identified. This includes a Preliminary Hazard List (PHL), a Preliminary Hazard Assessment (PHA), and an Operational Hazard Review and Analysis (OHR&A).

A PHL is a “brainstorming tool used to identify initial safety issues early in the UAS operation” (Barnhart, Shappee & Marshall, 2011). Once a hazard is identified, its probability and severity need to be established. Probability is rated as frequent (A), probable (B), occasional (C), remote (D), or improbable (E). Severity is rated as catastrophic (I), critical (II), marginal (III), or negligible (IV) (Barnhart, Shappee & Marshall, 2011).
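
The probability and severity scales above map naturally onto two enumerations. The sketch below shows one way a PHL entry could be recorded; the `Hazard` class, the `risk_code` format (for example `D-II`), and the sample hazard with its ratings are assumptions for illustration and are not taken from Barnhart, Shappee & Marshall (2011) or from Figure 2.

```python
from dataclasses import dataclass
from enum import Enum


class Probability(Enum):
    FREQUENT = "A"
    PROBABLE = "B"
    OCCASIONAL = "C"
    REMOTE = "D"
    IMPROBABLE = "E"


class Severity(Enum):
    CATASTROPHIC = "I"
    CRITICAL = "II"
    MARGINAL = "III"
    NEGLIGIBLE = "IV"


@dataclass(frozen=True)
class Hazard:
    """One row of a hypothetical Preliminary Hazard List."""
    description: str
    probability: Probability
    severity: Severity

    @property
    def risk_code(self) -> str:
        # Assumed display format: probability letter, dash, severity numeral.
        return f"{self.probability.value}-{self.severity.value}"


if __name__ == "__main__":
    # Illustrative entry only; the ratings are made up for the example.
    hazard = Hazard("Loss of controller link", Probability.REMOTE, Severity.CRITICAL)
    print(hazard.description, hazard.risk_code)
```

Using enumerations rather than free text means a rating outside the A to E or I to IV scales cannot be entered by accident.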

A PHA is completed after the PHL. The PHA asks what can be done to reduce or eliminate each hazard identified in the PHL. With regard to probability, we want to reduce or eliminate the possibility of occurrence in order to decrease exposure to the risk. See Figure 2 for an example of a PHL/A for Phantom 4 operations. Note that this list is not all-inclusive; it is for illustration purposes. An all-inclusive list would be developed for a specific flight and would include every possible risk for that flight. For example, if using the Phantom to record a local charity run, there may be additional factors such as overflight of a crowd or public space, or other sUASs in the area. If recording wildlife, there could be risks of interaction with the wildlife, losing the Phantom in a body of water, or airspace restrictions if the land has any particular designation. Weather risk depends on the season and on where the flight is occurring, for example mountains versus an open plain.

Figure 2. PHL/A

The OHR&A “is used to identify and evaluate hazards throughout the entire operation and its stages (planning, staging, launching, flight, and recovery)” (Barnhart, Shappee & Marshall, 2011). Risks identified in the PHL/A may also appear in the OHR&A, but the OHR&A can include additional risks such as human factors. The mitigating action from the PHL/A is used as the Action Review in the OHR&A tool. If the action was effective at mitigating the risk, no change is needed; if it was not, a new mitigating action needs to be developed. See Figure 3 for an example of an OHR&A for a flight.

Figure 3. OHR&A
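
The Action Review step of the OHR&A can be sketched as a filter over reviewed items: actions that worked stay as they are, and the rest are flagged for a new mitigating action. The `ReviewItem` class, the function name, and the sample entries are hypothetical; only the five stage names come from the quoted definition.

```python
from dataclasses import dataclass
from typing import List

# Stages of the operation as listed in the OHR&A definition.
STAGES = ("planning", "staging", "launching", "flight", "recovery")


@dataclass
class ReviewItem:
    """One hypothetical OHR&A row: a hazard and the review of its mitigation."""
    stage: str
    hazard: str
    mitigating_action: str
    action_effective: bool


def items_needing_new_action(items: List[ReviewItem]) -> List[ReviewItem]:
    """Return the items whose mitigating action was judged ineffective."""
    for item in items:
        if item.stage not in STAGES:
            raise ValueError(f"unknown stage: {item.stage!r}")
    return [item for item in items if not item.action_effective]


if __name__ == "__main__":
    # Sample rows are invented for illustration.
    review = [
        ReviewItem("launching", "Bystanders near launch point", "Cordon off area", True),
        ReviewItem("flight", "Operator fatigue", "Limit session length", False),
    ]
    for item in items_needing_new_action(review):
        print(f"{item.stage}: {item.hazard} needs a new mitigating action")
```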

An ORM serves two purposes. The first is to provide a “quick look at the operation before committing to the flight activity (a go/no-go decision)” (Barnhart, Shappee & Marshall, 2011). The second is that it “allows safety and management of real-time information needed to continually monitor the overall safety of the operation” (Barnhart, Shappee & Marshall, 2011). The ORM is meant to be an aid in deciding whether to conduct the flight, not the only means of deciding. See Figure 4 for an example. If anything changes during the flight, such as unexpected people showing up at the location or weather that differs from what was planned for, the risk needs to be updated appropriately in real time.

Figure 4. ORM

References

Long, R. (2017, December 13). The Great Safety is a Choice Delusion. Retrieved February 25, 2018, from https://safetyrisk.net/the-great-safety-is-a-choice-delusion/

Phantom 4 User Manual. (2017, October). Retrieved February 26, 2018, from https://dl.djicdn.com/downloads/phantom_4_pro/20171017/Phantom_4_Pro_Pro_Plus_User_Manual_EN.pdf

Barnhart, R. K., Shappee, E., & Marshall, D. M. (2011). Introduction to Unmanned Aircraft Systems. London, GBR: CRC Press. Retrieved from http://www.ebrary.com.ezproxy.libproxy.db.erau.edu